Microsoft has unveiled Phi-3, a new family of small language models (SLMs) designed to deliver strong performance at low cost.

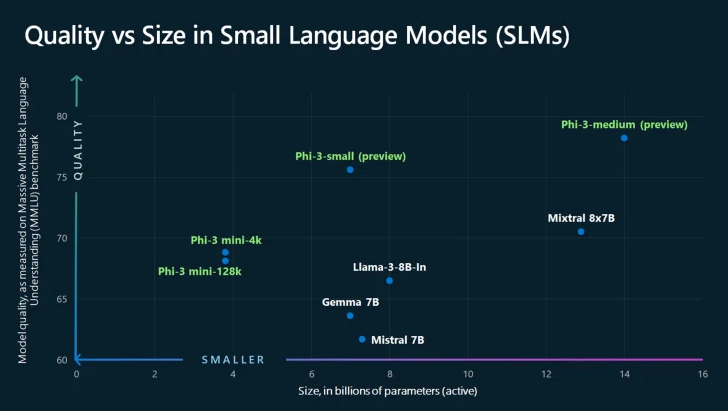

According to Microsoft, these models perform well on language understanding, reasoning, coding, and math benchmarks, outperforming models of similar or larger size. Phi-3 gives developers and businesses more options for using AI effectively while keeping costs in check.

Phi-3 Models: Overview and Availability

The first model in the lineup is Phi-3-mini, a 3.8-billion-parameter model now available on Azure AI Studio, Hugging Face, and Ollama. It is instruction-tuned, so it can be used out of the box without extensive fine-tuning. It comes in two context-length variants, 4K and 128K tokens. The 128K version can take in long documents in a single prompt, which Microsoft says comes with little impact on output quality.

To run efficiently across a range of hardware, Phi-3-mini has been optimized for ONNX Runtime and NVIDIA GPUs. Microsoft also plans to release Phi-3-small (7 billion parameters) and Phi-3-medium (14 billion parameters) soon, giving users more options to match different needs and budgets.

Phi-3 Model Performance and Evolution

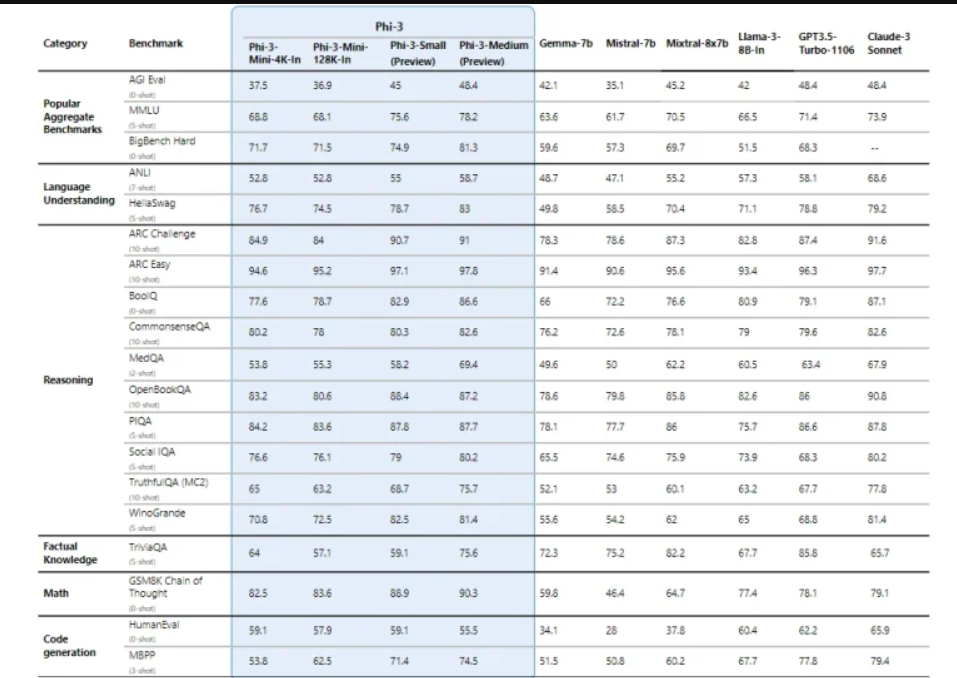

According to Microsoft, the Phi-3 models outperform models of similar or larger size across a range of benchmarks. For example, Phi-3-mini outperforms models twice its size on language understanding and generation tasks, and Phi-3-small and Phi-3-medium outperform much larger models, such as GPT-3.5 Turbo (GPT-3.5T), on some benchmarks.

Microsoft says it developed the Phi-3 models in line with its Responsible AI principles: accountability, transparency, fairness, reliability and safety, privacy and security, and inclusiveness. Before release, the models went through safety evaluations and red-teaming intended to support responsible deployment.

Possible Uses and Abilities of Phi-3

The Phi-3 models are designed for scenarios where compute resources are limited, low latency is critical, or costs must stay low. Because they are small enough to run on-device, they can bring AI features to hardware with modest computing power.

Their smaller size also makes it more affordable for businesses to fine-tune and customize the models for specific needs. Where response time matters, Phi-3 models return results quickly, which improves the user experience and makes real-time AI interactions more practical.

Phi-3-mini in particular shows strong reasoning and logic capabilities, making it a good fit for tasks such as data analysis and generating insights. As adoption grows, Phi-3 models could help drive innovation and make AI more accessible.

With Phi-3, Microsoft is pushing the boundaries of what small language models can do, making it easier to build AI into a wide range of applications and devices.