As Meta rolls out the latest update to its AI across all of its platforms, it has also released Llama 3 benchmark results for tech enthusiasts. These results, based on a standard set of tests, let independent researchers and developers assess how well Llama 3 performs on different tasks.

This openness lets users see how Llama 3 stacks up against similar models evaluated on the same benchmarks, giving a fair picture of what Llama 3 does well and where it might need improvement.

What does the Llama 3 benchmark indicate?

Meta AI's Llama 3 benchmark results come from a range of tests that check how well LLMs perform on tasks such as answering questions, summarizing, following instructions, and few-shot learning (picking up a task from only a few examples). These results make it possible to compare Llama 3's strengths and weaknesses with those of similar models.

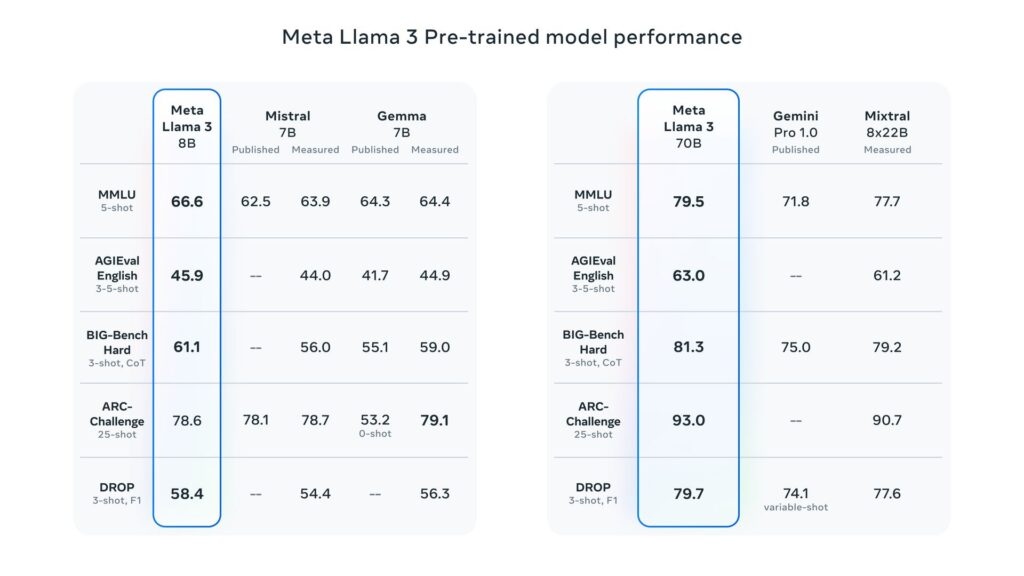

Although it's tricky to compare Meta's benchmark results directly with those published by competitors, since each vendor uses different evaluation methods, Meta says its Llama 3 models performed strongly on every task it tested. Meta presents this as evidence that it is competitive with the leading LLM developers.

- Parameter scale: Meta claims that its 8-billion and 70-billion parameter Llama 3 models outperform Llama 2 and set a new standard for models of similar size.

- Human evaluation: Meta ran assessments with human raters on a wide-ranging dataset covering 12 key use cases. According to Meta, these tests show that the instruction-tuned 70-billion parameter Llama 3 model performs well in real-world scenarios compared with similar-sized models from competitors.

- Independent benchmarks may be needed: These evaluations were carried out by Meta itself, so independent benchmarks will be needed for a more objective comparison.
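Meta has not published its human evaluation tooling. As a rough illustration of how pairwise human comparisons are commonly summarized, the sketch below turns a list of per-prompt rater verdicts into win, tie, and loss rates. The verdict labels and the `win_tie_loss` function are hypothetical, and the example verdicts are made up.

```python
from collections import Counter

def win_tie_loss(verdicts: list[str]) -> dict[str, float]:
    """Summarize pairwise rater verdicts for model A versus model B.

    Each verdict is assumed to be "win" (A preferred), "tie", or "loss"
    (B preferred). Returns the fraction of prompts in each category.
    """
    if not verdicts:
        raise ValueError("need at least one verdict")
    counts = Counter(verdicts)
    total = len(verdicts)
    return {label: counts[label] / total for label in ("win", "tie", "loss")}

# Made-up verdicts for illustration, not Meta's data.
print(win_tie_loss(["win", "win", "tie", "loss"]))
# {'win': 0.5, 'tie': 0.25, 'loss': 0.25}
```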

Training details

- Training hardware: Meta trained Llama 3 on two custom-built clusters, each with 24,000 GPUs.

- Training data: Mark Zuckerberg, Meta's CEO, said in a podcast interview that the 70-billion parameter model was trained on roughly 15 trillion tokens. Notably, the model was still improving when training stopped, which suggests it could get even better with more training data.

- Future plans: Meta is currently training a much larger 400-billion parameter version of Llama 3, which could put it in the same performance class as GPT-4 Turbo and Gemini Ultra on benchmarks such as MMLU, GPQA, HumanEval, and MATH.
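To put the 15-trillion-token figure in perspective, the quick calculation below divides it by the 70-billion parameter count. For comparison it uses the widely cited "Chinchilla" rule of thumb of roughly 20 training tokens per parameter for compute-optimal training; that heuristic comes from earlier scaling-law research rather than from Meta's announcement, and all figures here are approximate.

```python
def tokens_per_param(tokens: float, params: float) -> float:
    """Training tokens seen per model parameter."""
    return tokens / params

PARAMS_70B = 70e9       # 70 billion parameters
TOKENS = 15e12          # ~15 trillion training tokens (approximate public figure)
CHINCHILLA_RATIO = 20   # rule-of-thumb compute-optimal tokens per parameter (assumption)

ratio = tokens_per_param(TOKENS, PARAMS_70B)
budget = CHINCHILLA_RATIO * PARAMS_70B

print(f"~{ratio:.0f} tokens per parameter")                   # ~214
print(f"Chinchilla-style budget: ~{budget / 1e12:.1f}T tokens")  # ~1.4T
print(f"That is ~{TOKENS / budget:.0f}x the rule-of-thumb budget")  # ~11x
```

In other words, the model saw about ten times more data than the rule of thumb would suggest for its size, which makes the report that it was still improving all the more notable.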

Fine-Tuning for Instruction and Performance Boost

To make Llama 3 better at chat and dialogue, Meta developed a new approach to instruction fine-tuning. It combines supervised fine-tuning (SFT), rejection sampling, proximal policy optimization (PPO), and direct preference optimization (DPO).

The quality of the prompts used in supervised fine-tuning, and of the preference rankings used in proximal policy optimization and direct preference optimization, had an outsized influence on how well the models performed. Meta's team carefully curated this data and ran multiple rounds of quality checks on the human annotations.
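Meta has not released its fine-tuning code. For readers curious how a preference ranking becomes a training signal, here is a minimal sketch of the standard direct preference optimization loss for a single pair of responses, as defined in the original DPO paper. The function names, the plain-float inputs (summed log-probabilities that a real model would compute), and the `beta=0.1` default are illustrative assumptions, not Meta's settings.

```python
import math

def log_sigmoid(x: float) -> float:
    """Numerically stable log(sigmoid(x))."""
    if x >= 0:
        return -math.log1p(math.exp(-x))
    return x - math.log1p(math.exp(x))

def dpo_loss(policy_chosen: float, policy_rejected: float,
             ref_chosen: float, ref_rejected: float, beta: float = 0.1) -> float:
    """DPO loss for one preference pair.

    Each argument is the summed log-probability of a full response under
    the policy being trained or under a frozen reference model.
    """
    chosen_margin = policy_chosen - ref_chosen        # how much the policy boosted the preferred answer
    rejected_margin = policy_rejected - ref_rejected  # how much it boosted the dispreferred answer
    return -log_sigmoid(beta * (chosen_margin - rejected_margin))

# Policy identical to the reference: no preference learned yet, loss = log 2.
print(round(dpo_loss(-10.0, -12.0, -10.0, -12.0), 4))  # 0.6931
# Policy raised the chosen response and lowered the rejected one: loss drops below log 2.
print(dpo_loss(-9.0, -13.0, -10.0, -12.0) < math.log(2))  # True
```

The loss only depends on how the policy's log-probabilities shift relative to the reference model, which is why DPO needs no separate reward model.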

Learning from these preference rankings also improved Llama 3's performance on reasoning and coding tasks. Meta found that when the model struggled to answer a reasoning question, it would sometimes still produce the correct reasoning trace: it knew how to produce the right answer but not how to select it. Training on preference rankings taught the model to pick that answer.
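One way to turn this observation into training data is to sample several reasoning traces for a problem with a known answer and pair each correct trace (preferred) with each incorrect one (rejected). The sketch below does exactly that; the `Trace` class, the `build_preference_pairs` helper, and the exact-match answer check are hypothetical simplifications, not a description of Meta's pipeline.

```python
from dataclasses import dataclass
from itertools import product

@dataclass
class Trace:
    text: str    # the model's step-by-step reasoning
    answer: str  # the final answer extracted from that reasoning

def build_preference_pairs(traces: list[Trace], reference_answer: str) -> list[tuple[str, str]]:
    """Pair every correct trace (chosen) with every incorrect one (rejected).

    Assumes answers can be checked by exact match, which only holds for
    tasks such as math problems with a single known answer.
    """
    correct = [t for t in traces if t.answer == reference_answer]
    wrong = [t for t in traces if t.answer != reference_answer]
    return [(c.text, w.text) for c, w in product(correct, wrong)]

samples = [
    Trace("17 + 25 = 42", "42"),
    Trace("17 + 25 = 32", "32"),
    Trace("17 + 20 = 37, 37 + 5 = 42", "42"),
]
pairs = build_preference_pairs(samples, "42")
print(len(pairs))  # 2
```

Pairs like these could then feed a preference-optimization step such as DPO, nudging the model toward the reasoning it could already produce but did not reliably choose.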

Challenges Encountered in the Llama 3 Benchmark

- Acknowledging Limitations: Current LLM benchmarks have well-known weaknesses, such as contaminated training data (test questions leaking into the data a model is trained on) and vendors selectively presenting favorable results.

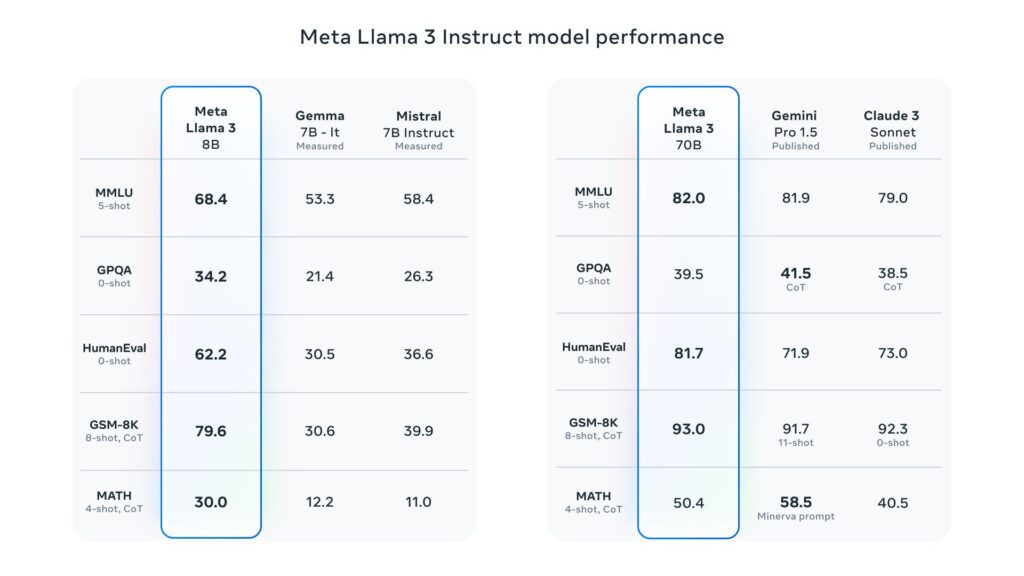

- Meta's Provided Benchmarks: Despite these challenges, Meta has published results on several benchmarks covering general knowledge (MMLU), grade-school math word problems (GSM-8K), coding (HumanEval), graduate-level questions (GPQA), and competition math problems (MATH).

- Comparison Results: According to Meta, these benchmarks show that the 8-billion parameter Llama 3 model compares favorably with similar-sized competitors such as Google's Gemma 7B and Mistral 7B Instruct. Likewise, the 70-billion parameter model performs well against established models such as Gemini Pro 1.5 and Claude 3 Sonnet.
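Coding benchmarks such as HumanEval are usually scored with pass@k, the probability that at least one of k generated solutions passes a problem's unit tests; headline scores are typically pass@1. The sketch below implements the unbiased pass@k estimator introduced alongside HumanEval. The function names and the example counts are illustrative, and Meta's exact evaluation settings may differ.

```python
from math import comb

def pass_at_k(n: int, c: int, k: int) -> float:
    """Unbiased pass@k estimate for a single problem.

    n: number of generated samples, c: samples that passed the unit tests,
    k: sample budget (k <= n). Returns 1 - C(n - c, k) / C(n, k), the chance
    that at least one of k samples drawn from the n is correct.
    """
    if n - c < k:
        # Every size-k subset must contain at least one passing sample.
        return 1.0
    return 1.0 - comb(n - c, k) / comb(n, k)

def benchmark_score(results: list[tuple[int, int]], k: int) -> float:
    """Average pass@k over problems, given (n, c) counts per problem."""
    return sum(pass_at_k(n, c, k) for n, c in results) / len(results)

# Hypothetical counts for three problems, 10 samples each.
results = [(10, 3), (10, 0), (10, 10)]
print(f"pass@1 = {benchmark_score(results, k=1):.3f}")  # pass@1 = 0.433
```

Generating more samples than k and applying this formula gives a lower-variance estimate than literally drawing k samples once per problem.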

Meta intends to make Llama 3 models available on popular cloud platforms such as AWS, Databricks, and Google Cloud, giving developers broad access to the technology.

Llama 3 powers Meta's virtual assistant, which will feature prominently in search across Facebook, Instagram, WhatsApp, and Messenger, as well as on a dedicated website with a ChatGPT-like interface that includes image generation.

Meta has also teamed up with Google to bring real-time search results into the assistant, adding to its existing integration with Microsoft's Bing.