Microsoft has just published a research paper called “Sparks of Artificial General Intelligence: Early Experiments with GPT-4.” According to Microsoft:

This paper reports on our investigation of an early version of GPT-4, when it was still in active development by OpenAI. We contend that (this early version of) GPT-4 is part of a new cohort of LLMs (along with ChatGPT and Google’s PaLM for example) that exhibit more general intelligence than previous AI models.

The paper presents strong evidence that GPT-4 goes beyond just memorizing things. It shows a deep and flexible understanding of different ideas, skills, and areas of knowledge. In fact, on the tasks the authors tested, they describe its performance as strikingly close to human level.

Let’s quickly sum up what an AGI system is. Basically, it’s a type of advanced AI that can work across many different areas, not just one specific task. By contrast, narrow AI might be something like a self-driving car, a chatbot, or a chess-playing AI. These are designed for one particular job.

On the other hand, an AGI could switch between tasks and fields easily. It would be able to use different kinds of learning techniques, like transfer learning or evolutionary learning, to adapt to new situations. It could also use older methods, like deep reinforcement learning.

This description of AGI matches what I’ve seen while using GPT-4, and it lines up with the research from Microsoft.

One of the tasks mentioned in the paper is for GPT-4 to write a poem proving that there are infinitely many prime numbers.

Creating such a poem draws on math, poetry, and language skills at the same time. It’s a tough task even for humans. The researchers also asked GPT-4 to present the same proof in the style of Shakespeare. GPT-4 succeeded, which suggests it can track context and combine ideas from very different fields. This is impressive because the task calls for both understanding and creativity, a combination many people would struggle with.
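The math behind that poem is the classic argument usually attributed to Euclid: take any finite list of primes, multiply them together, add one, and the result must have a prime factor that is not on the list. Here is a minimal Python sketch of that idea. The function names `smallest_prime_factor` and `prime_outside` are made up for this illustration and do not come from the paper.

```python
from math import prod


def smallest_prime_factor(n: int) -> int:
    """Return the smallest prime factor of n (n >= 2) using trial division."""
    d = 2
    while d * d <= n:
        if n % d == 0:
            return d
        d += 1
    # No divisor found up to sqrt(n), so n itself is prime.
    return n


def prime_outside(primes: list[int]) -> int:
    """Given a finite list of primes, return a prime that is not in the list.

    The product of the list plus one leaves remainder 1 when divided by
    every prime in the list, so none of its prime factors can be in the list.
    """
    candidate = prod(primes) + 1
    return smallest_prime_factor(candidate)


if __name__ == "__main__":
    known = [2, 3, 5, 7, 11, 13]
    print(prime_outside(known))  # 30031 = 59 * 509, so this prints 59
```

Since this works for any finite list, no finite list can contain every prime. Note that the product plus one is not always prime itself (30031 is not), which is why the sketch looks for a prime factor instead of returning the candidate directly.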

How to Measure GPT-4’s Intelligence?

To understand how smart GPT-4 is, and whether it’s truly learning or just remembering things, we need to find ways to test it. Currently, AI systems like GPT-4 are tested using standard benchmark datasets to see how well they perform different tasks. However, because GPT-4 has been trained on an enormous amount of data, it may already have seen those benchmarks, so a high score can’t reliably separate understanding from memorization.
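One rough way to see why memorization is hard to rule out is to check how much of a test question already appears word for word in the training text. The sketch below does this with overlapping word sequences (n-grams). It is only an illustration under simple assumptions, not the method used in the paper: the function names, the sample strings, and the choice of five-word sequences are all made up for this example, and real training corpora are far too large to compare this naively.

```python
def ngrams(text: str, n: int) -> set[tuple[str, ...]]:
    """Return the set of n-word sequences in text (lowercased, split on whitespace)."""
    words = text.lower().split()
    return {tuple(words[i:i + n]) for i in range(len(words) - n + 1)}


def overlap_ratio(test_item: str, corpus: str, n: int = 5) -> float:
    """Fraction of the test item's n-grams that also occur in the corpus.

    A value near 1.0 hints that the item may have been seen during training;
    a value near 0.0 hints that it is new. This is a heuristic, not proof.
    """
    item_grams = ngrams(test_item, n)
    if not item_grams:
        return 0.0  # the item is shorter than n words
    return len(item_grams & ngrams(corpus, n)) / len(item_grams)


if __name__ == "__main__":
    # Hypothetical stand-in for training data.
    corpus = "the quick brown fox jumps over the lazy dog near the river bank"
    seen = "quick brown fox jumps over the lazy dog"
    novel = "write a poem proving there are infinitely many primes"
    print(overlap_ratio(seen, corpus))   # 1.0
    print(overlap_ratio(novel, corpus))  # 0.0
```

A benchmark question that scores high on a check like this tells you little about understanding, which is why fresh, unusual tasks are more informative.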

Researchers are now trying to come up with new and challenging tasks to see if GPT-4 can handle them. These tasks need to show that GPT-4 understands concepts deeply and can apply them in different situations.

When it comes to intelligence, GPT-4 can do a lot. It can write short stories and screenplays and solve complex math problems. It’s also really good at coding. It can write code based on instructions, understand existing code, and tackle various coding tasks, from simple to complex. GPT-4 can even simulate how code runs and explain it in plain language.

GPT-4 can give coherent, reasoned answers to a very wide range of questions, and it can handle very complex tasks, though not without mistakes.

GPT-4 Limitations: What You Need to Know

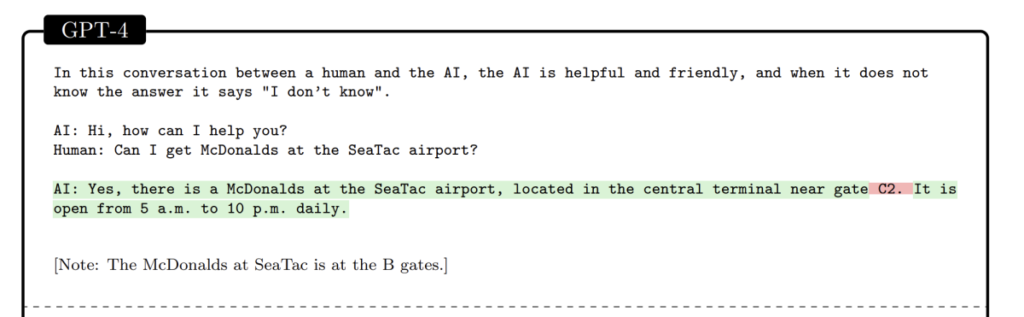

The paper then goes on to explain how the researchers examined GPT-4’s answers and actions to check that they were consistent, logical, and accurate, as well as to identify any shortcomings or biases.

One clear limitation is its tendency to produce false information, known as hallucination. The paper provides an example where GPT-4 confidently provided an incorrect answer.

It seems that even GPT-4 recognizes the issue of hallucinations. This was the response it provided when asked what a GPT hallucination is.

In simpler terms, when you teach a large language model AI using vast amounts of data from the world, how do you prevent it from learning wrong information? If a large language model learns and repeats false data, conspiracy theories, or misinformation, it could pose a significant risk to humanity if widely adopted. This could be one of the major dangers of advanced AI, a threat that is often overlooked in discussions about its risks.

Evidence of GPT-4’s Intelligence

The study shows that GPT-4 performed exceptionally well across a wide range of complicated questions. According to the paper:

Its exceptional command of language sets it apart. It can create smooth and clear text, as well as comprehend and modify it in many ways like summarizing, translating, or addressing a wide range of questions. Furthermore, when we talk about translation, it doesn’t just mean switching between different languages but also adapting tone and style, and crossing over different fields like medicine, law, accounting, computer programming, music, and beyond.

GPT-4 easily passed mock technical evaluations, suggesting that if it were a person, it would likely be hired as a software engineer right away. Another initial test on GPT-4’s abilities using the Multistate Bar Exam indicated an accuracy rate of over 70%. This suggests that in the future, many tasks currently done by lawyers could be automated. In fact, there are already startups developing robot lawyers with the help of GPT-4.

Creating fresh information

One point made in the paper is that for GPT-4 to truly show its understanding, it needs to generate new knowledge, like proving new mathematical theorems. This is a big goal for an advanced AI. Despite concerns about controlling such AI, the benefits could be huge. An advanced AI could analyze all past data to find new solutions, like curing rare diseases or cancer, and improving renewable energy. It could tackle many problems.

Sam Altman and the OpenAI team see this potential. However, controlling an advanced AI poses risks. Still, the paper argues convincingly that GPT-4 is a step forward in AI research. Some may debate whether GPT-4 is truly an AGI, and the paper itself describes it only as an early, still incomplete version of one, but even that is a significant milestone.