UK startup Fabula AI has developed a way for artificial intelligence to help social media platforms manage the spread of disinformation. The goal is to address the frequent issues social media faces with misleading content.

Even Facebook’s Mark Zuckerberg has expressed doubts about AI’s ability to fully grasp the complex and often messy nature of human communication on social media, whether it’s well-meaning or harmful.

Fabula AI Algorithm

News often requires understanding political or social context and common sense, which current natural language processing algorithms lack.

Fabula says it has developed a new class of machine learning algorithms called Geometric Deep Learning. These algorithms extend convolutional neural networks to learn patterns in complex, graph-structured data sets such as social networks. Fabula’s Chief Scientist, Michael Bronstein, has co-authored a paper on the approach with Yann LeCun, Director of AI Research at Facebook.
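To give a rough feel for what learning on a social graph involves, here is a minimal sketch of a single neighborhood-averaging step, the basic ingredient of many graph neural networks. It is not Fabula’s model: the function name, the toy graph, and the two-number feature vectors are all made up for illustration, and a real layer would add learned weights and a nonlinearity.

```python
from typing import Dict, List


def mean_aggregate(
    neighbors: Dict[str, List[str]],
    features: Dict[str, List[float]],
) -> Dict[str, List[float]]:
    """One neighborhood-averaging step.

    Each node's new feature vector is the mean of its own vector and the
    vectors of its direct neighbors. Hypothetical helper, for illustration.
    """
    updated = {}
    for node, own in features.items():
        group = [own] + [features[n] for n in neighbors.get(node, [])]
        # zip(*group) walks the vectors column by column.
        updated[node] = [sum(col) / len(group) for col in zip(*group)]
    return updated


# Hypothetical toy graph: user "a" is connected to both "b" and "c".
neighbors = {"a": ["b", "c"], "b": ["a"], "c": ["a"]}
features = {"a": [1.0, 0.0], "b": [0.0, 1.0], "c": [0.0, 0.0]}
smoothed = mean_aggregate(neighbors, features)
```

After one step, each user’s features reflect their immediate connections. Repeating the step lets information travel further across the network.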

Facebook’s founder admitted two years ago that properly moderating content on the company’s massive platform is a huge challenge. He said it would take a long time to develop systems that can truly “understand” what people are posting.

But what if we don’t need AI to literally read and grasp the meaning of everything? Maybe there’s another way to identify fake news.

- New tool to fight online confusion: A company called Fabula AI developed a new way to spot unreliable information online. They call it “Geometric Deep Learning” and it’s designed to handle the massive amounts of data on social media.

- Traditional methods struggle: Usual approaches for analyzing information have trouble with such complex data.

- Fabula AI claims a breakthrough: They believe their method can learn patterns in this messy data and identify misleading content.

- Beyond “fake news”: While Fabula AI uses “fake news” in their promotion, they aim to tackle both deliberate lies (disinformation) and accidental sharing of inaccurate information (misinformation). This could be anything from a fake photo to a real image used in the wrong way.

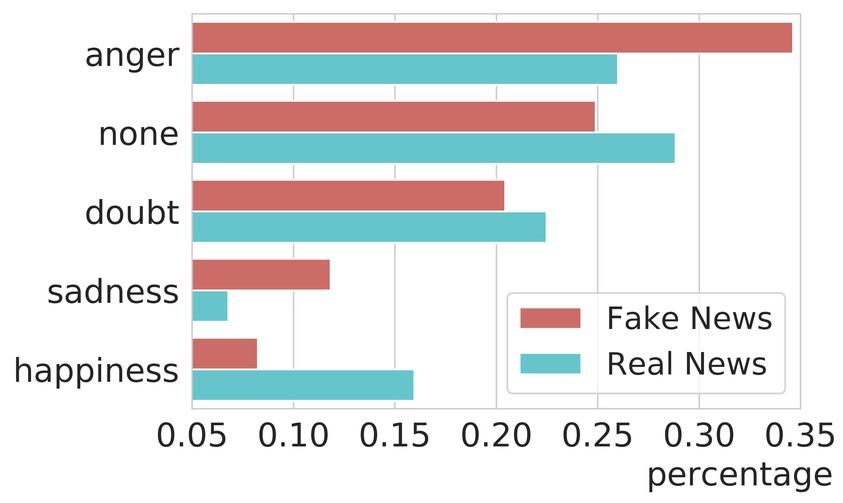

- Fabula AI fights fakes differently: Instead of analyzing the content itself, Fabula AI focuses on how information spreads on social media. They believe “fake news” and real news have distinct spreading patterns.

- Learning from patterns: Fabula’s system, called “geometric deep learning,” can analyze these social network patterns. It considers things like who shares the information and how it travels.

- Fake news like a disease? Fabula AI compares the spread of fake news to how illnesses move through a population. Just like germs spread quickly between people who are close, fake news might race through social circles that share similar beliefs.

- Humans, not just bots: While some spread fake news on purpose (disinformation), others might do it accidentally (misinformation). The study Fabula AI references suggests people themselves are a big reason false information travels fast.

- Spotting BS with AI: This “unfortunate human quirk” of sharing inaccurate things might actually help identify it! Fabula’s AI tool is under development, but it aims to use these spreading patterns to spot misleading content on social media.

- Testing and future plans: Fabula’s AI has been tested internally on Twitter data. They plan to offer it to other platforms and publishers later this year. The AI would run in real-time, giving content platforms a tool to identify potentially false information.

- Scoring truth: Fabula AI wants to be a “truth-risk scoring platform.” Their AI would score information based on how likely it is to be fake news. This is similar to a credit score, but instead of rating a person’s finances, it would rate the trustworthiness of information.
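The idea of judging a story by how it spreads rather than by what it says can be made concrete with a small sketch. The code below summarizes a share cascade by its size, depth, and maximum breadth. These three features, the function name, and the sample data are illustrative assumptions. Fabula has not said its system uses these features, and a learned model would work on far richer signals.

```python
from collections import defaultdict
from typing import Dict, List, Tuple


def cascade_features(root: str, shares: List[Tuple[str, str]]) -> Dict[str, int]:
    """Summarize a share cascade.

    `shares` holds (sharer, source) pairs meaning "sharer reshared from source".
    Assumes the cascade is a tree: every user reshares from exactly one source.
    """
    children = defaultdict(list)
    for sharer, source in shares:
        children[source].append(sharer)

    size, depth, breadth = 0, 0, 0
    level = [root]
    while level:
        size += len(level)                  # users reached so far
        breadth = max(breadth, len(level))  # widest generation of reshares
        next_level = [c for user in level for c in children[user]]
        if next_level:
            depth += 1                      # one more hop away from the root
        level = next_level
    return {"size": size, "depth": depth, "max_breadth": breadth}


# Hypothetical cascade: two direct reshares, then a chain two hops deeper.
example_shares = [("u1", "origin"), ("u2", "origin"), ("u3", "u1"), ("u4", "u3")]
stats = cascade_features("origin", example_shares)
```

A classifier could take summaries like these, computed for many labeled stories, as input and output a risk score for a new story.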

Fabula AI says that in its tests the algorithms identified 93 percent of “fake news” within hours of it starting to spread, a figure the company describes as much higher than that of other methods. The 93 percent is a ROC AUC score, a standard measure of how well a classifier separates two classes.
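ROC AUC is worth a closer look, because it is a ranking measure rather than a simple hit rate. It is the probability that a randomly chosen fake story gets a higher score than a randomly chosen true one. The sketch below computes it directly from that definition. The labels and scores are invented for illustration and have nothing to do with Fabula’s data.

```python
from typing import List


def roc_auc(labels: List[int], scores: List[float]) -> float:
    """ROC AUC as the chance a positive outscores a negative (ties count half)."""
    pos = [s for y, s in zip(labels, scores) if y == 1]
    neg = [s for y, s in zip(labels, scores) if y == 0]
    if not pos or not neg:
        raise ValueError("need at least one positive and one negative example")
    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(pos) * len(neg))


# Invented example: 1 = labeled fake, 0 = labeled true.
labels = [1, 1, 0, 0, 1, 0]
scores = [0.9, 0.8, 0.7, 0.2, 0.6, 0.1]
auc = roc_auc(labels, scores)  # 8 of the 9 fake/true pairs are ranked correctly
```

A score of 0.5 means the ranking is no better than chance, and 1.0 means every fake story outranks every true one.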

The dataset used to train the model included around 250,000 Twitter users and 2.5 million social connections. Fabula AI relied on fact-checking labels from organizations like Snopes and PolitiFact. Gathering the data took months, and about a thousand stories were used to train the model. The team believes the approach works for both small social networks and large ones like Facebook.

To ensure the model wasn’t just detecting patterns from bot-spread news, they included verified true users in their training data. Research shows bots didn’t play a major role in spreading fake news, as they were only responsible for a small percentage of it. Bots can be detected through connectivity or content analysis, which their methods also cover.

The team also tested the model’s performance over time by training it on past data and then testing it with different data splits.
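One common way to run this kind of check is a time-based split: train only on stories that appeared before a cutoff, then test on stories that appeared later. The sketch below shows the idea. The function name, the timestamps, and the story IDs are hypothetical, and the source does not say exactly how Fabula built its splits.

```python
from typing import List, Tuple, TypeVar

T = TypeVar("T")


def temporal_split(
    items: List[Tuple[float, T]], cutoff: float
) -> Tuple[List[T], List[T]]:
    """Split (timestamp, item) pairs into train (before cutoff) and test (at or after)."""
    train = [item for ts, item in items if ts < cutoff]
    test = [item for ts, item in items if ts >= cutoff]
    return train, test


# Hypothetical stories tagged with the day they first appeared.
stories = [(1.0, "s1"), (2.0, "s2"), (3.0, "s3"), (4.0, "s4")]
train, test = temporal_split(stories, cutoff=3.0)
```

Moving the cutoff forward and repeating the evaluation shows whether a model trained on older stories still works on newer ones.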